Gemini AI Under Attack – How Hackers Use Prompts To Steal And Clone AI Models

Google’s Gemini AI is facing a new kind of cyber threat: attackers are trying to copy its behaviour using carefully crafted prompts instead of traditional hacking tools. These attacks, called distillation or model extraction attacks, aim to replicate Gemini’s capabilities and logic without ever breaching Google’s internal systems.

What are distillation and model extraction attacks?

In a distillation or model extraction attack, hackers use legitimate access to an AI model and send it a very large number of queries, often automatically generated. By recording the outputs and patterns from those responses, they try to train a separate model that behaves similarly to the original one.

Unlike classic cyberattacks that exploit software vulnerabilities, these attacks focus on learning from the model’s own answers. Over time, the attacker’s cloned model can mimic key features, reasoning style, and responses of the original system, effectively copying its most valuable intellectual property.
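To make the query, record, and train loop concrete, here is a toy sketch in Python. It does not reflect how Gemini works or how the reported attackers operated. The `teacher` function, its hidden rule, the query counts, and the tiny logistic-regression "student" are all hypothetical stand-ins, chosen only to show that a model trained purely on another model's answers can end up agreeing with it.

```python
import math
import random

def teacher(x):
    """Stand-in for a proprietary model; the attacker only sees its outputs."""
    # Hidden decision rule (hypothetical). The attacker never reads these numbers.
    return 1 if 2.0 * x[0] - 1.0 * x[1] + 0.5 > 0 else 0

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def collect_queries(n, rng):
    """Send n automatically generated queries and record (input, output) pairs."""
    data = []
    for _ in range(n):
        x = (rng.uniform(-1, 1), rng.uniform(-1, 1))
        data.append((x, teacher(x)))
    return data

def train_student(data, epochs=20, lr=0.1):
    """Fit a small logistic-regression model to the recorded answers (SGD)."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            p = sigmoid(w[0] * x[0] + w[1] * x[1] + b)
            g = p - y  # gradient of the log loss with respect to the logit
            w[0] -= lr * g * x[0]
            w[1] -= lr * g * x[1]
            b -= lr * g
    return w, b

def student(params, x):
    """Predict with the cloned model."""
    w, b = params
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0

def agreement(params, n, rng):
    """Fraction of fresh queries on which student and teacher give the same answer."""
    hits = 0
    for _ in range(n):
        x = (rng.uniform(-1, 1), rng.uniform(-1, 1))
        hits += student(params, x) == teacher(x)
    return hits / n

rng = random.Random(0)
recorded = collect_queries(2000, rng)   # the "large number of queries"
params = train_student(recorded)        # the separate model
score = agreement(params, 1000, rng)
print(f"student agrees with teacher on {score:.1%} of fresh queries")
```

The student never touches the teacher's internals. Everything it learns comes from recorded answers, which is why this kind of copying needs no breach at all. Real language models are vastly more complex, so the same idea requires far more queries and compute.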

How hackers targeted Google’s Gemini AI

According to Google’s latest report, adversaries used more than 100,000 AI‑generated prompts to probe the Gemini AI model. This massive wave of automated queries was designed to map out how Gemini responds across different tasks, topics, and edge cases.

Attackers relied on “legitimate access,” meaning they did not need to break in or bypass security controls to interact with the system. Instead, they systematically flooded the model with prompts to extract enough information to reconstruct its capabilities inside separate models under their control.

Who is behind these attacks?

Google links this activity to state‑aligned actors from countries including China, Russia, and North Korea. These groups are increasingly involved in digital espionage campaigns focused on advanced technologies such as large language models and AI infrastructure.

The motivation goes beyond curiosity. Stealing or replicating a powerful commercial AI model can save governments or organisations huge research and development costs. It can also accelerate their own AI programmes, allowing them to compete with or undermine global leaders in the field.

Why the attacks on Gemini AI matter for the AI industry

Google emphasizes that these attacks do not directly endanger ordinary users in their daily interactions with Gemini. However, they pose a serious risk to AI developers and service providers because they target the core technology that makes these systems valuable.

If attackers successfully clone a model’s capabilities, they can deploy copycat systems without investing in training, safety testing, or governance. This undermines fair competition and can lead to unsafe or unregulated clones of powerful models circulating in the wild.

Expert warning: “Canary in the coal mine”

John Hultquist, chief analyst for the Google Threat Intelligence Group, described Google as “the canary in the coal mine” for this kind of AI theft. His comment suggests that Gemini may be one of the first major targets, but other leading AI developers are likely to face similar attacks soon.

As global competition in AI intensifies, attacks on commercial models will probably become more frequent and more sophisticated. The Gemini case is therefore an early warning sign for the entire AI ecosystem.

Rising global AI competition and IP risks

The disclosure comes at a time when countries and companies are racing to build more advanced AI systems. Chinese firms such as ByteDance are pushing forward with new video generation tools, including Seedance 2.0, which shows how fast the field is moving.

In this climate, intellectual property related to AI models—architecture, training data, tuning methods, and behaviour—has become extremely valuable. Google’s report notes that distillation attacks are now a prime concern because they directly threaten that intellectual property by attempting to copy model capabilities at scale.

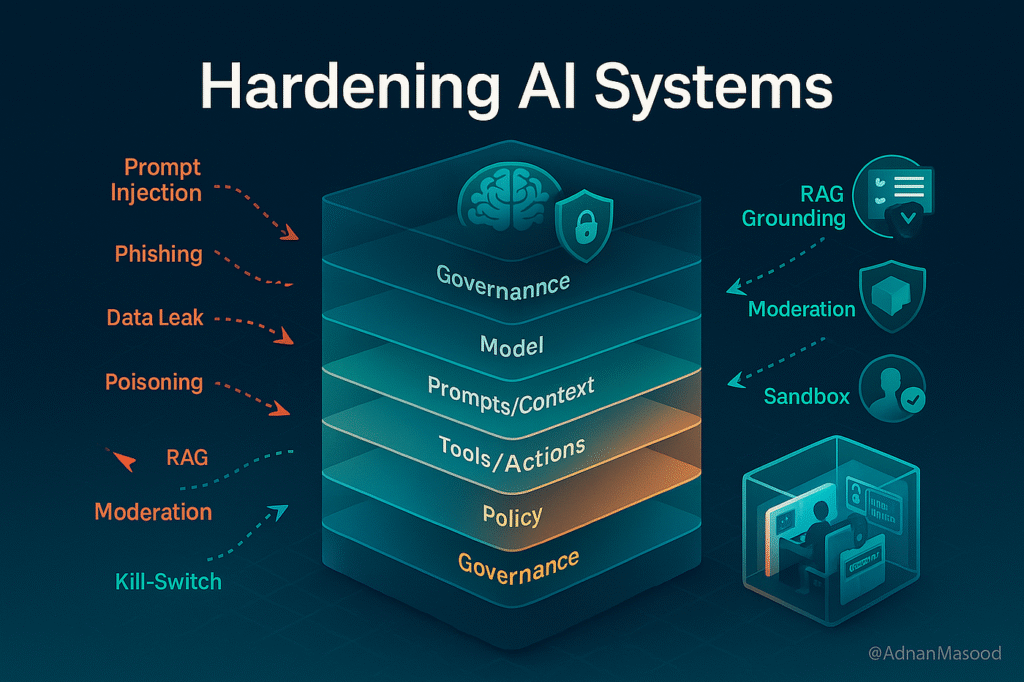

How AI providers can respond

To defend against model extraction and distillation attacks, AI providers need more than traditional cybersecurity controls. They must monitor unusual query patterns, rate‑limit suspicious activity, and design systems that make it harder to reverse‑engineer model behaviour.
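As one illustration of the rate‑limiting idea, here is a minimal sketch of a per‑client sliding‑window limiter in Python. It is not Google's implementation. The class name, the `client_id` key, and the thresholds are assumptions made for the example, and a real deployment would pair this with monitoring of query patterns rather than rely on volume alone.

```python
from collections import defaultdict, deque

class SlidingWindowLimiter:
    """Allow at most max_requests per client within window_seconds."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._history = defaultdict(deque)  # client id -> accepted request times

    def allow(self, client_id, now):
        """Return True if a request arriving at time `now` (seconds) may proceed."""
        stamps = self._history[client_id]
        # Drop timestamps that have aged out of the window.
        while stamps and now - stamps[0] >= self.window_seconds:
            stamps.popleft()
        if len(stamps) >= self.max_requests:
            return False  # over the limit: reject, and do not record the request
        stamps.append(now)
        return True

# Hypothetical limit: 3 requests per 60 seconds for each API key.
limiter = SlidingWindowLimiter(max_requests=3, window_seconds=60)
results = [limiter.allow("key-A", t) for t in (0, 1, 2, 3, 61)]
print(results)  # [True, True, True, False, True]
```

Passing the timestamp in as an argument keeps the sketch easy to test. In production the caller would supply a monotonic clock reading, and the per‑client history would usually live in a shared store so that limits hold across servers.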

Additionally, legal and policy frameworks around AI intellectual property will likely become more important. Stronger rules and enforcement mechanisms could help deter state‑aligned groups and organisations from attempting to clone proprietary AI models through prompt‑based attacks.

What this means for users and developers

For everyday users, Google stresses that these attacks do not currently translate into a direct personal security threat when using Gemini services. However, the long‑term impact could be significant if cloned models are deployed without safety guardrails or oversight.

For developers and AI companies, the message is clear: protecting the behaviour of models is now just as important as securing their infrastructure. As prompt‑driven attacks grow, model extraction and distillation defences will need to become a standard part of any serious AI security strategy.

Final Verdict: Gemini AI Under Attack And The Urgent Need To Stop Prompt‑Based Cloning

The attempt to clone Gemini through prompts is a real‑world warning for the entire AI industry. It shows that protecting model behaviour and training data is just as important as traditional cybersecurity.

For Google and other providers, it is a clear signal to monitor traffic carefully, limit automated querying, and strengthen defences against model extraction attacks.